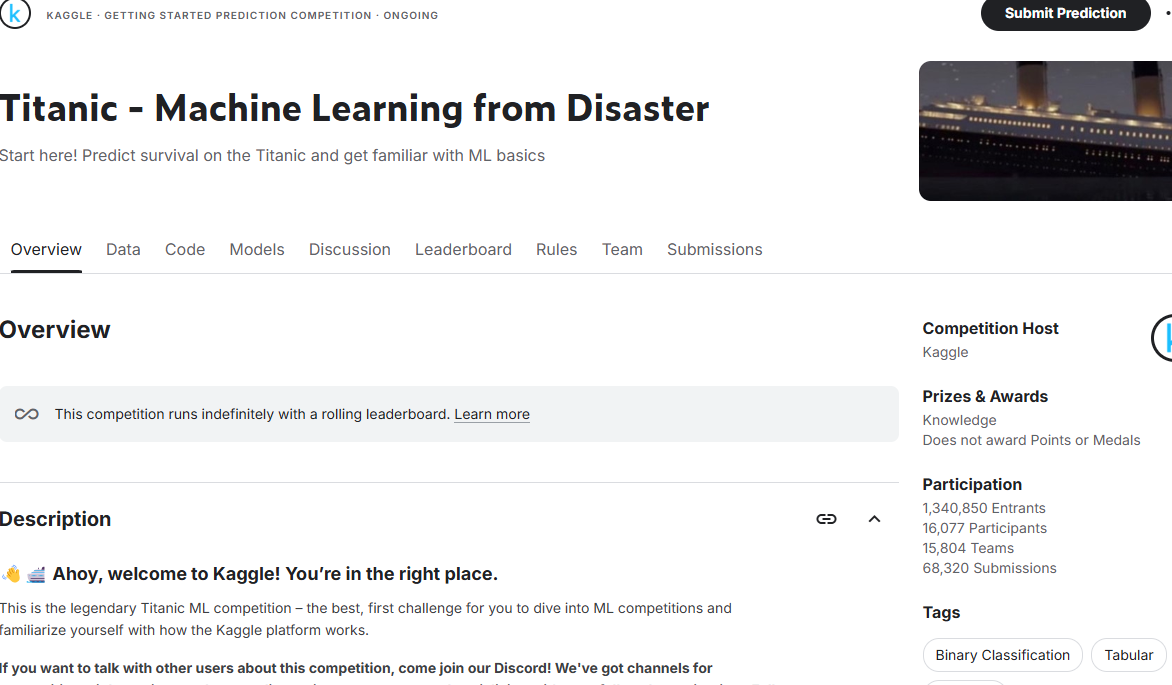

Kaggle

Titanic Problem

Task

On April 15, 1912, the RMS Titanic sank after colliding with an iceberg, resulting in the death of 1502 out of 2224 passengers and crew.

The aim of this challenge is to build a model that predicts whether each passenger in the test dataset survived, based on passenger data (e.g. name, age, gender, socio-economic class). A training dataset is provided.

Process

Exploring the dataset showed that the "age" feature had around 20% missing values, "cabin" had around 80% missing values, and "embarked" and "fare" had only a few missing values.

It was decided to:

- fill in the age data with the median age for that passenger's sex and class

- fill in the embarked data with research (as missing values were few)

- fill in the fare data with the median fare for that passenger's class and family size

- categorise the missing cabin data as "Missing"
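
The group-wise median imputation described above can be sketched with pandas as follows. This is a minimal illustration rather than the notebook's actual code: the rows are made up, and the column names (`Sex`, `Pclass`, `Age`, `SibSp`, `Parch`, `Fare`, `Cabin`) are assumed to follow the standard Kaggle Titanic CSV layout. The "embarked" values are not covered because they were filled in by hand.

```python
import numpy as np
import pandas as pd

# Made-up rows in the assumed Kaggle column layout (not real passenger data).
df = pd.DataFrame({
    "Sex":    ["male", "male", "male", "female", "female", "female"],
    "Pclass": [3, 3, 3, 1, 1, 1],
    "Age":    [20.0, np.nan, 30.0, 40.0, 50.0, np.nan],
    "SibSp":  [0, 0, 0, 1, 1, 1],
    "Parch":  [0, 0, 0, 0, 0, 0],
    "Fare":   [8.0, np.nan, 7.0, 80.0, np.nan, 90.0],
    "Cabin":  [np.nan, np.nan, "E10", "C85", np.nan, "C123"],
})

# Age: fill each gap with the median age of passengers of the same sex and class.
age_median = df.groupby(["Sex", "Pclass"])["Age"].transform("median")
df["Age"] = df["Age"].fillna(age_median)

# Fare: fill each gap with the median fare for the same class and family size.
df["FamilySize"] = df["SibSp"] + df["Parch"]
fare_median = df.groupby(["Pclass", "FamilySize"])["Fare"].transform("median")
df["Fare"] = df["Fare"].fillna(fare_median)

# Cabin: treat missing values as their own category.
df["Cabin"] = df["Cabin"].fillna("Missing")

print(df[["Age", "Fare", "Cabin"]])
```

`transform("median")` returns a value for every row, aligned with the original index, so it can be passed straight to `fillna` without a merge.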

Feature Engineering:

- continuous features were binned

- the family size feature was created by adding the sibling/spouse and parent/child feature values

- the ticket number feature was frequency encoded

- titles were extracted from the name feature and grouped into 5 categories

- non-numerical features were label encoded and categorical features were one-hot encoded

- the training and test datasets were scaled with the StandardScaler from scikit-learn
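
Several of the feature engineering steps above might look like the pandas sketch below. The names and rows are invented, the column names are assumed to match the Kaggle CSV, and the age bin edges and the five title groups (`Mr`, `Mrs`, `Miss`, `Master`, `Other`) are hypothetical choices for illustration, since the exact bins and groups used in the notebook are not listed here.

```python
import pandas as pd

# Invented rows; names follow the "Surname, Title. Given names" pattern.
df = pd.DataFrame({
    "Name": [
        "Smith, Mr. John",
        "Smith, Mrs. Jane",
        "Jones, Miss. Anna",
        "Brown, Master. Tom",
        "Grey, Dr. Alan",
    ],
    "Age":    [22.0, 38.0, 15.0, 2.0, 65.0],
    "SibSp":  [1, 1, 0, 3, 0],
    "Parch":  [0, 0, 0, 1, 0],
    "Ticket": ["A/5 0001", "PC 0002", "PC 0002", "0003", "0004"],
})

# Family size: siblings/spouses plus parents/children.
df["FamilySize"] = df["SibSp"] + df["Parch"]

# Frequency encoding: replace each ticket with how often it occurs.
df["TicketFreq"] = df["Ticket"].map(df["Ticket"].value_counts())

# Title: the text between the comma and the first full stop in the name.
df["Title"] = df["Name"].str.extract(r",\s*([^.]+)\.", expand=False).str.strip()

# Group rare titles together (assumed grouping, five categories in total).
common_titles = ["Mr", "Mrs", "Miss", "Master"]
df["Title"] = df["Title"].where(df["Title"].isin(common_titles), "Other")

# Bin a continuous feature (hypothetical bin edges and labels).
df["AgeBin"] = pd.cut(
    df["Age"],
    bins=[0, 12, 18, 40, 60, 120],
    labels=["child", "teen", "adult", "middle", "senior"],
)

# One-hot encode the grouped titles.
encoded = pd.get_dummies(df, columns=["Title"])
print(encoded.columns.tolist())
```

When train and test sets are processed separately, computing the ticket frequencies and bin edges once and reusing them on both sets keeps the encodings consistent.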

Model:

- the XGBoost model was chosen as gradient-boosted trees are known to perform well on small tabular classification problems like this one

- hyperparameters were tuned with Bayesian Optimisation using the HyperOpt library

- the highest accuracy achieved was 0.79

Conclusion

I learned a lot about data preprocessing and manipulation, feature engineering and feature selection, and hyperparameter tuning. I also tried out GridSearchCV for hyperparameter tuning, but Bayesian Optimisation seemed to perform better.

There is definitely heaps more to learn and lots of room for improvement! Feel free to message me on LinkedIn with any comments or questions about the process or the code in the Jupyter Notebook. I am extremely grateful for any feedback.